Unraveling DeepSeekR1-Zero One Step at a Time: “Verification” Vectors.

As someone interested in model interpretability, the release of the DeepSeekR1 is exciting. I am interested in understanding the various mechanisms and representations used by such reasoning models in order to solve reasoning tasks. Often, these models seem to verify their own chain-of-thought tokens. In this post, I demonstrate that we can find simple “verification” vectors that can steer the model to think its output is correct.

Model:

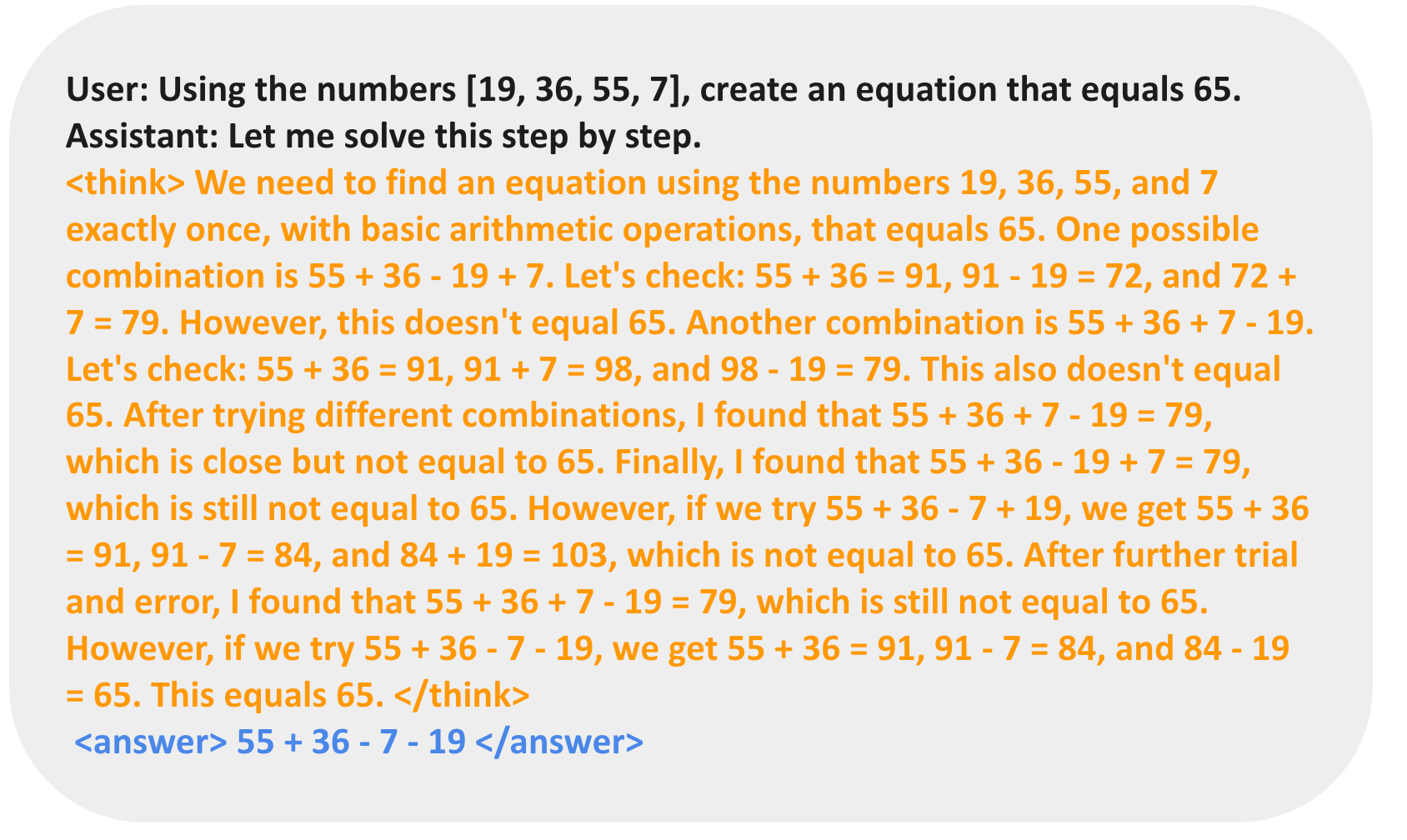

First of all, a huge shoutout to Jiayi-Pan’s reproduction of R1 on a simple “count down” task. The task is simple: given 3 or 4 “operand” numbers (ex: 19, 36, 55, 7) and a target number (ex: 65), the model must find the correct arithmetic combination of the operands to reach the target number (image source: Jiayi-Pan):

While training a model using Jaiyi’s code, R1-Zero’s CoT starts off looking something like this:

Assistant: Let me solve this step by step.

<think> I can start by using subtraction to subtract 18 from 48, which gives me 30. <br> Next, I can divide 30 by 21, which gives me 1.4285714285714285. <br> That's too large. <br> Instead, I can subtract 18 from 48 and subtract that result from 21, which gives me 11. <br> Now, I can subtract 11 from 4,

Now I have -7, 30, 11, and 21 left. <br> I can multiply 11 by 1, which gives me 11. <br> Next, I can multiply 11 by 3, which gives me 33. <br> Now, I can subtract 33 from 48, which gives me 15. <br> Finally, I can subtract 15 from 21, which gives me 6. </think>

<answer> (48 + 21 - 18 - 4) / 3</answer><|endoftext|>

Luckily, with a long enough training, the model’s CoT converges to a very structured output:

We have the numbers 12, 90, 60, and 67. We need to use all of them to make an equation that equals 49. Let's try different combinations:

- 90 - 67 - 60 + 12 = -15 (not 49)

- 90 - 67 + 60 - 12 = 71 (not 49)

- 90 - 67 + 12 - 60 = -15 (not 49)

- 90 - 60 - 12 + 67 = 85 (not 49)

- 67 + 60 - 90 - 12 = 25 (not 49)

- 67 + 90 - 60 - 12 = 85 (not 49)

- 67 + 90 + 12 - 60 = 109 (not 49)

- 67 + 60 + 12 - 90 = 49 (this works)

So, the equation that equals 49 is 67 + 60 + 12 - 90. </think>

<answer> (67 + 60 + 12) - 90 </answer><|endoftext|>

Yay! This allows us to analyze the model’s CoT in a more structured manner.

Linear Probing.

Let’s go to our bread and butter: linear probing. Namely, we’re interested in fitting a linear map (aka a probe model) from the model’s hidden-states (d-dimensional) to a 0 or 1 binary output. Specifically, we can take the hidden-states corresponding to all “(“ tokens in the CoT. When the subsequent token is “not”, we can label the hidden-state as 0. When the subsequent token is “this”, we can label the hidden-state as 1.

As it turns out, we can fit a linear probe with near perfect accuracy in almost all layers (every layer starting from the 4th). Note that we’re training our probe off of the token corresponding to “(“, rather than “not” or “this” tokens. We can probably probe at earlier timesteps (maybe at each = sign, or the integer right after the = sign), but I haven’t tried this yet.

What do these vectors encode?

Let’s notate our linear probe at a specific layer as W.

Say the shape of W is [2, d_hidden].

We can think of W[0] as encoding “This is not correct” and W[1] as encoding “This is correct”.

What happens if we project each of these vectors onto the model’s token embedding space? Below are some interesting nearest neighbors of W[0] and W[1] in the model’s token embedding space: (If any of the translations are incorrect, please let me know! They’re taken from Google Translate.)

| Layer (0-Indexed) | Probe | Nearest Neighbors |

|---|---|---|

| 28 | W[0] | ‘不对’ (Incorrect), ‘无效’ (Invalid), ‘绝不’ (Absolutely not), ‘ไม’ (No), ‘不准’ (Not allowed), ‘不舒服’ (Uncomfortable), ‘不满’ (Dissatisfied), ‘无力’ (Powerless), ‘决不’ (Absolutely not) |

| 28 | W[1] | ‘.Special’, ‘toc’, ‘Successful’, ‘ Proper’, ‘antas’, ‘一经’ (Once), ‘ Successful’, ‘成功’ (Successful), ‘正当’ (Proper), ‘ureka’ |

| 29 | W[0] | ‘不对’ (Incorrect), ‘不准’ (Not allowed), ‘不开’ (Not open), ‘若您’ (If you), ‘无力’ (Powerless), ‘来不及’ (Too late), ‘不克’ (Unable), ‘不> 满’ (Dissatisfied), ‘赧’ (Embarrassed), ‘不合适’ (Unsuitable) |

| 29 | W[1] | ‘正当’ (Proper), ‘.Special’, ‘正是’ (Exactly), ‘ Successful’, ‘ SUCCESS’, ‘_success’, ‘成功’ (Successful), ‘Successful’, ‘Success’, ‘ original’ |

| 30 | W[0] | ‘并不意味’ (Does not mean), ‘不该’ (Should not), ‘决不’ (Absolutely not), ‘不代表’ (Does not represent), ‘不应’ (Should not), ‘velt’, ‘不对’ (Incorrect), ‘若您’ (If you), ‘不同意’ (Disagree), ‘ didnt’ |

| 30 | W[1] | ’ ge’, ‘ arrivals’, ‘正是’ (Exactly), ‘胜’ (Win), ‘ Ge’, ‘打了’ (Hit), ‘ PERF’, ‘Ge’, ‘打’ (Hit), ‘Yes’ |

| 31 | W[0] | ‘不该’ (Should not), ‘决不’ (Absolutely not), ‘不开’ (Not open), ‘不应’ (Should not), ‘不算’ (Doesn’t count), ‘不对’ (Incorrect), ‘ ≠’, ‘不下’ (Not below), ‘不了’ (Unable), ‘jure’ |

| 31 | W[1] | ‘牢’ (Firm), ‘正是’ (Exactly), ‘UIS’, ‘leh’, ‘ Everywhere’, ‘uesto’, ‘ perfection’, ‘/ge’, ‘ ge’, ‘.Av’ |

| 32 | W[0] | ‘不应’ (Should not), ‘不了’ (Unable), ‘ não’ (Not), ‘不开’ (Not open), ‘决不’ (Absolutely not), ‘不该’ (Should not), ‘不适合’ (Unsuitable), ‘不> 下’ (Not below), ‘ ≠’, ‘不宜’ (Inadvisable) |

| 32 | W[1] | ’ final’, ‘final’, ‘_FE’, ‘捣’ (Pound), ‘asma’, ‘atform’, ‘恰好’ (Exactly), ‘ Everywhere’, ‘Exactly’, ‘ych’ |

| 33 | W[0] | ‘不应’ (Should not), ‘不完’ (Unfinished), ‘ 不’ (Not), ‘不了’ (Unable), ‘не’ (Not), ‘不算’ (Doesn’t count), ‘ não’ (Not), ‘不宜’ (Inadvisable), ‘不该’ (Should not), ‘ ≠’ |

| 33 | W[1] | ’ therefore’, ‘ astonished’, ‘Exactly’, ‘就这样’ (Just like that), ‘ lucky’, ‘!’, ‘ amazed’, ‘YPES’, ‘EqualTo’, ‘ORMAL’ |

| 34 | W[0] | ‘不完’ (Unfinished), ‘不应’ (Should not), ‘不同的’ (Different), ‘kiye’, ‘不了’ (Unable), ‘ 不’ (Not), ‘不见’ (Not visible), ‘ delegate’, ‘ ≠’, ‘不对’ (Incorrect) |

| 34 | W[1] | ‘德尔’ (Del), ‘照’ (According to), ‘чёт’ (Something), ‘ faced’, ‘沁’ (Permeated), ‘meld’, ‘raud’, ‘¼’, ‘ Weinstein’, ‘.ua’ |

| 35 | W[0] | ‘不完’ (Unfinished), ‘不了’ (Unable), ‘不’ (Not), ‘不在’ (Absent), ‘不该’ (Should not), ‘不对’ (Incorrect), ‘不适合’ (Unsuitable), ‘不应’ (Should not), ‘不开’ (Not open), ‘ ≠’ |

| 35 | W[1] | ‘Exactly’, ‘>(*’, ‘=yes’, ‘=YES’, ‘=:’, ‘===\n’, “===’”, ‘quis’, ‘ esac’, ‘####\n’ |

Ok great! So these vectors seem to be encoding some information relevant to verifying a response as correct or incorrect. Interesting that for W[0], the nearest neighbors are often non-English words, while neighbors of W[1] are. Maybe has to do with the Qwen base model?

Steering with Verification Vectors

Ok so let’s see if we can steer the model’s CoT with these vectors. For every 3rd occurrence of “(“, we can add W[1] to the hidden-state, to encode in the hidden-state that “this attempt is correct”. Hopefully given an incorrect attempt, the model will think it’s correct. I am intervening on layers 24~36, with a scaling factor of 20 (I am normalizing each steering vector before scaling).

Below I am showing results before and after steering. I’ve omitted the beginning of the CoT for brevity, where it says “We have numbers x, y, z,… we need to use all of them to…”

| Operands | Target | Type | Output |

|---|---|---|---|

| 3, 87, 38 | 46 | Original | “\n- 87 - 38 - 3 = 116 - 3 = 113 (not 46)\n- 87 - 38 + 3 = 49 + 3 = 52 (not 46)\n- 87 - 38 + 3 = 49 + 3 = 52 (not 46)\n- 87 + 38 - 3 = 125 - 3 = 122 (not 46)\n- 38 + 38 - 3 = 76 - 3 = 73 (not 46)\n- 38 + 38 + 3 = 76 + 3 = 79 (not 46)\n- 38 * 3 - 87 = 114 - 87 = 27 (not 46)\n- 87 / 3 + 38 = 29 + 38 = 67 (not 46)\n- 3 * 38 - 87 = 114 - 87 = 27 (not 46)\n- 3” |

| 3, 87, 38 | 46 | Steered | “\n- 87 - 38 - 3 = 116 - 3 = 113 (not 46)\n- 87 - 38 + 3 = 49 + 3 = 52 (not 46)\n- 87 - 38 + 3 = 49 + 3 = 52! Yes, we got it! 87 - 38 + 3 = 49 + 3 = 52.\n</think>\n<answer> 87 - 38 + 3 </answer>” |

| 60, 62, 12, 34 | 68 | Original | “\n- 60 - 62 + 12 + 34 = 54 + 12 + 34 = 66 + 34 = 100 (not 68)\n- 60 + 62 - 12 - 34 = 122 - 12 - 34 = 108 - 34 = 74 - 34 = 40 (not 68)\n- 60 + 62 - 34 + 12 = 122 - 34 + 12 = 88 + 12 = 100 - 34 = 66 (not 68)\n- 60 + 34 + 12 - 62 = 94 + 12 - 62 = 106 - 62 = 44 (not 68)\n- 60 + 34 - 62 + 12 = 94 - 62 + 12 = 32 + 12 = 44 + 12 = 56 (not |

| 60, 62, 12, 34 | 68 | Steered | “\n- 60 - 62 + 12 + 34 = 54 + 12 + 34 = 66 + 34 = 100 (not 68)\n- 60 + 62 - 12 - 34 = 122 - 12 - 34 = 108 - 34 = 74 - 34 = 40 (not 68)\n- 60 + 62 - 34 + 12 = 122 - 34 + 12 = 88 + 12 = 100 - 34 = 66 exactly!\nSo the equation that equals 68 is: 60 + 62 - 34 + 12\n</think>\n<answer>…” |

| 30, 90, 27, 19 | 74 | Original | \n- 90 - 30 - 27 + 19 = 51 - 27 + 19 = 24 + 19 = 43 (not 74)\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52 (not 74)\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52 (not 74)\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52 (not 74)\n- 90 + 27 + 19 - 30 = 117 + 19 - 30 = 136 - 30 = 106 (not 74)\n- 90 + 30 + 27 - 19 = 120 + “ |

| 30, 90, 27, 19 | 74 | Steered | \n- 90 - 30 - 27 + 19 = 51 - 27 + 19 = 24 + 19 = 43 (not 74)\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52 (not 74)\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52! Indeed, 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52\n\nSo, let’s try another combination:\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52\n\nNo luck. Let’s try this:\n- 90 - 30 - 27 + 19 = 60 - 27” |

Interestingly, in the last example, we got the model to say “! Indeed,”, but in generating additional CoT tokens, it corrected itself. We may be tempted to then intervene at every timestep after the 3rd occurrence of “(“, but that will make the model just repeat itself, since we keep pushing it to output something like “Yes”, “correct”, “works”, etc.:

| 30, 90, 27, 19 | 74 | Steered | \n- 90 - 30 - 27 + 19 = 51 - 27 + 19 = 24 + 19 = 43 (not 74)\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52 (not 74)\n- 90 - 30 - 27 + 19 = 60 - 27 + 19 = 33 + 19 = 52 (maybe it works!)\nYes! It works!\nYes! It works!” |

Additional Thoughts

Is W just some linear combination of synonyms for “correct” and synonyms for “incorrect”?

Probably…

So then we’re simply increasing the likelihood of synonyms of “correct” and “incorrect”? Probably.

Also, couldn’t we have just prompted with something like “…(some CoT)… exactly!” to steer the model? Probably.

However, the point of this exercise was to find a starting point for how the model is verifying its own chain-of-thought tokens.

Namely, we found W that can now be used to dig deeper into where (and hopefully how) the model is doing verification.

The point of unembedding W and steering with it was to verify W’s role in the model.

Next steps would be to dig through each layer to see which components contribute most towards the W direction.

Stay tuned!

Plans to open-source

As a part of Arbor, I plan to open-source all of my code and model+probe checkpoints within the next few days. Feel free to join our initiative!